Learning Rust and building a small photo catalogue

Introduction #

It's always good to learn new programming languages - especially when even Werner Vogels is making time for it. I decided to learn Rust on a toy example that combines three things I like doing: photography, basic data science, and overengineering for the purpose of learning 😊

The source code for what I have built is available here: https://github.com/pkrusche/photocat

The task #

Here is the task I set out to achieve:

- Retrieve metadata from a set of photos on the file system (such as date taken, lens / camera used etc.),

- put these into a database indexed by filename and sha256 and do some basic cleaning,

- produce ASCII visuals to quickly summarize and learn things about my pictures - for example, when were they taken, or which focal lengths (and lenses) were used.

Practically, aside from now knowing a bit of Rust, I can carry fewer things the next time, (maybe) improve my picture-taking, and sell things I don't use anymore - all in a data-driven manner.

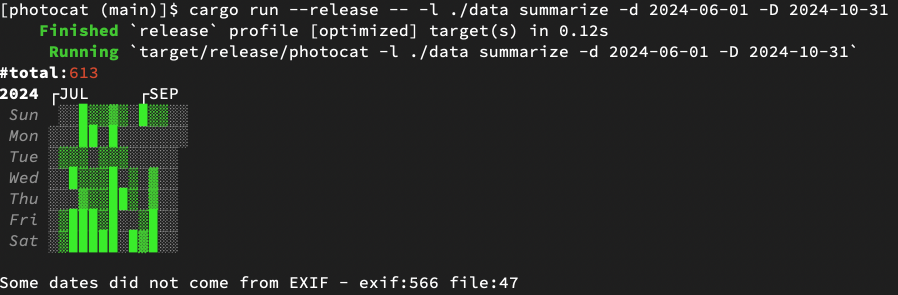

(1) When did I take pictures? #

A nice visual for when pictures were taken is a GitHub-style contributions graph. Ideally the dates come from EXIF rather than from the file timestamp, which only tells me when I downloaded or processed the picture. In a Google Takeout export, however, the EXIF dates may have been lost depending on the path the image took to get there (e.g. if it was uploaded through a messaging app that strips metadata). Photocat warns about this and falls back to the file date in that case. Unsurprisingly, I take most pictures on weekends and public holidays.

photocat -l <path-to-database> summarize -d <start-date> -D <end-date>

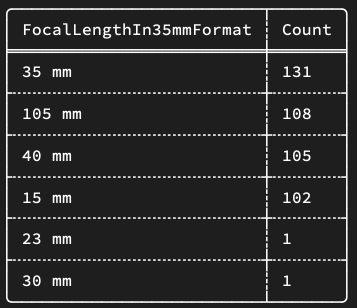
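To make the idea of a contributions graph concrete, here is a minimal sketch that buckets dates into a weekday-by-week ASCII grid using only the standard library. It is not photocat's implementation: the function names, the Monday-first layout, and the cell characters (`.`, `+`, `#`) are assumptions made for illustration.

```rust
use std::collections::HashMap;

/// Days since 1970-01-01 for a Gregorian (year, month, day), following the
/// well-known `days_from_civil` algorithm.
fn days_from_civil(y: i64, m: i64, d: i64) -> i64 {
    let y = if m <= 2 { y - 1 } else { y };
    let era = y.div_euclid(400);
    let yoe = y.rem_euclid(400); // year of era, 0..=399
    let mp = (m + 9) % 12; // March = 0, ..., February = 11
    let doy = (153 * mp + 2) / 5 + d - 1; // day of (shifted) year
    let doe = yoe * 365 + yoe / 4 - yoe / 100 + doy; // day of era
    era * 146_097 + doe - 719_468
}

/// Weekday index with Monday = 0 (1970-01-01 was a Thursday, i.e. 3).
fn weekday_from_days(days: i64) -> usize {
    (days + 3).rem_euclid(7) as usize
}

/// Renders one text line per weekday and one column per week.
/// Hypothetical cell legend: '.' no photos, '+' one or two, '#' three or more.
fn contribution_grid(
    dates: &[(i64, i64, i64)],
    start: (i64, i64, i64),
    end: (i64, i64, i64),
) -> Vec<String> {
    const LABELS: [&str; 7] = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"];
    let first = days_from_civil(start.0, start.1, start.2);
    let last = days_from_civil(end.0, end.1, end.2);
    if last < first {
        return Vec::new();
    }
    // Column 0 starts at the Monday on or before the start date.
    let origin = first - weekday_from_days(first) as i64;
    let weeks = ((last - origin) / 7 + 1) as usize;

    // Count photos per day, ignoring dates outside the requested range.
    let mut counts: HashMap<i64, usize> = HashMap::new();
    for &(y, m, d) in dates {
        let day = days_from_civil(y, m, d);
        if day >= first && day <= last {
            *counts.entry(day).or_insert(0) += 1;
        }
    }

    (0..7)
        .map(|row| {
            let mut line = format!("{} ", LABELS[row]);
            for week in 0..weeks {
                let day = origin + (week * 7 + row) as i64;
                let cell = if day < first || day > last {
                    ' ' // outside the requested range
                } else {
                    match counts.get(&day).copied().unwrap_or(0) {
                        0 => '.',
                        1..=2 => '+',
                        _ => '#',
                    }
                };
                line.push(cell);
            }
            line
        })
        .collect()
}
```

Calling `contribution_grid` with a list of `(year, month, day)` tuples and a start and end date returns seven strings that can be printed line by line. A real tool would more likely use a date crate than hand-rolled calendar arithmetic; the arithmetic is spelled out here only to keep the sketch dependency-free.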

(2) What did I use to take pictures? #

I do prefer lenses with a fixed focal length - but which ones to keep and bring? Part of it depends on what I'll be taking pictures of - but I also get keepers at different rates with different lenses. Here we query just an overall summary over all pictures - but we can also look at a "keeper rate" by comparing the counts of processed and selected photos to the count of raw photos. Or maybe answer the question of whether my next phone really needs a tele lens.

Photocat can print a nice looking ASCII table to the terminal or export a CSV file.

photocat -l <path-to-database> summarize \
    --summary-options 'count:FocalLengthIn35mmFormat'

=> ...
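The output is elided above, so as an illustration of what a `count:FocalLengthIn35mmFormat` summary boils down to, here is a small sketch that counts photos per focal length and renders the result as an ASCII table or as CSV. The function names, column headers, and table layout are assumptions for this example and do not reflect photocat's actual output format.

```rust
use std::collections::BTreeMap;

/// Counts how many photos were taken at each 35mm-equivalent focal length.
/// A BTreeMap keeps the focal lengths sorted for display.
fn count_by_focal_length(focal_lengths_mm: &[u32]) -> BTreeMap<u32, usize> {
    let mut counts = BTreeMap::new();
    for &fl in focal_lengths_mm {
        *counts.entry(fl).or_insert(0) += 1;
    }
    counts
}

/// Renders the counts as a fixed-width ASCII table (hypothetical layout).
fn to_ascii_table(counts: &BTreeMap<u32, usize>) -> String {
    let rule = "+--------------+-------+\n";
    let mut out = String::new();
    out.push_str(rule);
    out.push_str("| focal length | count |\n");
    out.push_str(rule);
    for (fl, n) in counts {
        out.push_str(&format!("| {:>9} mm | {:>5} |\n", fl, n));
    }
    out.push_str(rule);
    out
}

/// Renders the same counts as CSV with a header row (hypothetical columns).
fn to_csv(counts: &BTreeMap<u32, usize>) -> String {
    let mut out = String::from("focal_length_35mm,count\n");
    for (fl, n) in counts {
        out.push_str(&format!("{},{}\n", fl, n));
    }
    out
}
```

Keeping the aggregation separate from the two renderers means the same counts can feed both the terminal table and the CSV export.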

The tool #

Here are some notes on my Rust learning experience.

Installing Rust #

This was actually the first thing that somewhat tripped me up. The apparently preferred way to install Rust is by sourcing a script from a website (ugh). There's a helpful summary post on how to install Rust here: https://www.legendu.net/en/blog/install-rust-globally/ - but I'd say there is room for improvement in the overall process. While it is possible to install Rust without sourcing the script (well, the official pkg gets quarantined, but this isn't unfixable), doing so led to issues with rust-analyzer not working properly (both on Mac and Linux, for me) - either missing the Rust source code or something about proc-macro-servers... leading to various workarounds. Developing in a container may well be the way to go in the future.

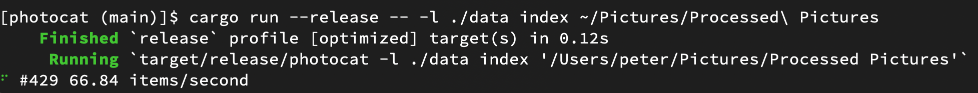

Indexing files #

The first thing to build was a crawler that would read EXIF metadata from every image in a set of paths. I do organize my files in folders locally, but a lot of my pictures actually live in Google Photos albums (specifically the ones from my phone). There are various ways to automate getting them via APIs, but in the end I opted for a simple solution: pick up metadata from an album download from Google Photos, or from one of my regular Google Takeout downloads. This was a nice way to learn general control structures as well as concurrency and multi-threading in Rust.

photocat -l <path-to-database> index <path-to-pictures>

Indexing does two things:

- Compute a SHA256 sum of the image file, which serves as its key in the database. This means that when a file gets updated & overwritten we can infer version information (query all matching paths with different SHA sum and sort by date).

- Run ExifTool on each file (it supports all file formats I encountered in my Takeout albums & local folders, including HEIC and RAW / NEF), extract some values from its JSON output, put them into the database.
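The version lookup mentioned in the first step (same path, different SHA sum, sorted by date) can be sketched in memory as follows. The `IndexEntry` struct, its field names, and the use of a plain integer timestamp are hypothetical; photocat stores its records in a database, and its schema may look different.

```rust
use std::collections::BTreeMap;

/// One indexed file. All field names are assumptions for this sketch.
#[derive(Debug, Clone, PartialEq)]
struct IndexEntry {
    sha256: String,  // hex digest of the file contents (the database key)
    path: String,    // where the file was found
    indexed_at: u64, // e.g. seconds since the Unix epoch
}

/// Groups entries by path and keeps only paths that were seen with more than
/// one distinct hash, i.e. files that were overwritten with new content.
/// Each version list is sorted from oldest to newest.
fn versions_by_path(entries: &[IndexEntry]) -> BTreeMap<String, Vec<IndexEntry>> {
    let mut by_path: BTreeMap<String, Vec<IndexEntry>> = BTreeMap::new();
    for entry in entries {
        let versions = by_path.entry(entry.path.clone()).or_default();
        // Re-indexing unchanged content does not create a new version.
        if !versions.iter().any(|v| v.sha256 == entry.sha256) {
            versions.push(entry.clone());
        }
    }
    by_path.retain(|_, versions| versions.len() > 1);
    for versions in by_path.values_mut() {
        versions.sort_by_key(|e| e.indexed_at);
    }
    by_path
}
```

In a SQL database the same question would be a query that groups by path and orders by date; the in-memory version is only meant to show the logic.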

Both steps run through a single-producer, multiple-consumer mechanism: one producer enumerates files sequentially, and several consumers execute the IO asynchronously in parallel. I think doing so looks pretty neat in Rust - and it's also easy to add a nice progress indicator on the command line (the package ecosystem is great!).
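A single-producer, multiple-consumer pipeline can be sketched with nothing but standard-library threads and channels. This is an illustration of the pattern, not photocat's code, which may well use an async runtime or a different channel crate; `process_in_parallel` and its `work` callback (standing in for hashing a file and running ExifTool on it) are hypothetical names.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Sends every path through a pool of worker threads and collects the results.
/// `work` stands in for the per-file IO (hashing, metadata extraction).
fn process_in_parallel<F>(paths: Vec<String>, workers: usize, work: F) -> Vec<(String, usize)>
where
    F: Fn(&str) -> usize + Send + Sync + 'static,
{
    let (job_tx, job_rx) = mpsc::channel::<String>();
    let (result_tx, result_rx) = mpsc::channel::<(String, usize)>();
    // std's channel has a single receiver, so the consumers share it behind a mutex.
    let job_rx = Arc::new(Mutex::new(job_rx));
    let work = Arc::new(work);

    let handles: Vec<_> = (0..workers.max(1))
        .map(|_| {
            let job_rx = Arc::clone(&job_rx);
            let result_tx = result_tx.clone();
            let work = Arc::clone(&work);
            thread::spawn(move || loop {
                // The lock is released at the end of this statement,
                // so it is not held while the job is being processed.
                let job = job_rx.lock().unwrap().recv();
                match job {
                    Ok(path) => {
                        let value = (*work)(&path);
                        result_tx.send((path, value)).unwrap();
                    }
                    Err(_) => break, // producer hung up: no more jobs
                }
            })
        })
        .collect();
    // Drop the original sender so the result channel closes once all workers finish.
    drop(result_tx);

    // The single producer: enumerate the files sequentially.
    for path in paths {
        job_tx.send(path).unwrap();
    }
    drop(job_tx); // signal the workers that no more jobs will arrive

    let mut results: Vec<(String, usize)> = result_rx.iter().collect();
    for handle in handles {
        handle.join().unwrap();
    }
    results.sort(); // workers finish in arbitrary order
    results
}
```

For example, `process_in_parallel(paths, 4, |p| p.len())` runs the closure on four worker threads. Results arrive in whatever order the workers finish, which is why the sketch sorts them before returning.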

Writing code #

I use VS Code & rust-analyzer. This works really well - in fact I'd say it's smoother than with most other languages I work with regularly (R and Python, and formerly also C/C++/many others). Also nice: built-in code formatting and package management. I wish this was as easy with C++. A fun fact is that ChatGPT and friends are not yet great at writing code in Rust (probably they'd do better as an agent with access to rust-analyzer). Testing and debugging also work well, and it is nice that there seems to (largely) be one way to do it that is actually built in.
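As a small illustration of the built-in testing workflow, here is a sketch of a cleaning helper with a unit test next to it, runnable with `cargo test`. The helper `parse_focal_length_mm` is hypothetical, and the assumption that focal lengths arrive as strings such as `"35 mm"` is mine, not taken from photocat.

```rust
/// Parses a focal length such as "35 mm" or "35.0mm" into whole millimetres.
/// Returns None for empty, non-numeric, or non-positive values.
fn parse_focal_length_mm(raw: &str) -> Option<u32> {
    let number = raw.trim().trim_end_matches("mm").trim();
    let value: f64 = number.parse().ok()?;
    if value.is_finite() && value > 0.0 {
        Some(value.round() as u32)
    } else {
        None
    }
}

// Unit tests live next to the code and are only compiled by `cargo test`.
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn parses_values_with_and_without_unit() {
        assert_eq!(parse_focal_length_mm("35 mm"), Some(35));
        assert_eq!(parse_focal_length_mm("85"), Some(85));
    }

    #[test]
    fn rejects_garbage() {
        assert_eq!(parse_focal_length_mm("unknown"), None);
    }
}
```

The `#[cfg(test)]` module is left out of normal builds, so the tests add nothing to the shipped binary.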

Binaries and runtime #

Things I like:

- Linking is static by default (for Rust dependencies, at least; the system C library is usually still linked dynamically). There are many opinions out there about this, mine is that being able to ship a single file that just works is pretty awesome.

- Binaries and memory footprint are small. Rust is one of the "greenest" programming languages according to this study: https://sites.google.com/view/energy-efficiency-languages/. There are a few caveats to the assessment but it's very satisfying to have something that doesn't eat RAM and efficiently utilizes compute nonetheless.

Conclusion #

Will I write all my code in Rust from now on? Probably not - but for writing code that compiles to binaries which run on many systems it is definitely a nice choice. I might try my next Advent of Code with it.